SearchEye Publisher Quality Score White Paper

Table of Contents

- Introduction to Common Link Building Challenges

- Why Authority and Traffic Aren’t Enough: The Problem with Traditional Metrics

- Introducing the SearchEye Publisher Quality Score (QS) Algorithm

- Competitive Analysis

- Case Studies / Sample Site Evaluations

- How Agencies Can Use This Data to Drive Better PR Outcomes

- Call to Action: Create a Free Account on SearchEye (2-Week Free Trial)

Executive Summary

SearchEye’s new publisher quality score (QS) algorithm is transforming link building into a quality-first practice for PR and SEO agencies. This white paper introduces a novel “PR co-pilot” approach where link placements are vetted not just on traditional metrics like Domain Authority (DA) or traffic, but on a comprehensive assessment of site quality. By scoring publisher websites across five key categories – Content Quality & Relevance, Business Authenticity, Trust & Legitimacy, User Experience & Design, and Freshness & Maintenance – SearchEye helps agencies avoid low-quality link farms and private blog networks (PBNs) and focus on truly valuable publisher relationships. We compare this approach to traditional link marketplaces (FatJoe, Loganix, Authority Builders, etc.) and provide case studies of real sites with score breakdowns. The findings show that a quality-first strategy leads to better long-term link value, enhanced brand visibility, and smarter PR decisions. Finally, we outline how agencies can leverage SearchEye’s co-pilot and marketplace (with a 2-week free trial) to streamline outreach and consistently achieve high-authority, high-quality backlinks.

Introduction to Common Link Building Challenges

Link building is widely recognized as one of the most impactful yet challenging aspects of SEO and digital PR. In fact, over half of digital marketers (around 52%) regard link building as the most difficult part of SEO. PR and SEO agencies face a myriad of challenges in this arena:

- Identifying Quality Opportunities: The internet is flooded with potential sites for backlinks, but many are low-quality or even fraudulent. Separating legitimate publishers from spammy ones requires extensive vetting.

- Avoiding “Link Farms” and PBNs: Private Blog Networks and link farms often masquerade as genuine sites but exist solely to sell links. They can have deceptively high authority metrics while offering little real value. Agencies risk penalties and wasted budget if they accidentally place links on these platforms.

- Over-Reliance on Simplistic Metrics: Traditional metrics like DA, Domain Rating (DR), or monthly traffic are convenient filters, but they don’t tell the whole story of a site’s quality. High DA or traffic can be artificially inflated. Many SEO professionals learned the hard way that a link from a real, trusted website can far outperform dozens of links from dubious high-DA sites.

- Scalability of Outreach: Vetting each prospective site manually – checking content, design, reputation – is time-consuming. Agencies struggle to scale link-building campaigns while maintaining quality control.

- Client Pressure and Short-Termism: Clients often demand quick results (rankings, referral traffic), pushing agencies toward tactics that favor quantity of links. However, chasing dozens of low-quality links can backfire, as Google’s algorithms and quality guidelines increasingly emphasize link quality over quantity.

Given these challenges, it’s clear why a smarter, more reliable system for evaluating link opportunities is needed. SearchEye’s publisher quality score algorithm was developed to address these pain points, enabling agencies to focus on truly high-quality publisher relationships without the guesswork.

Why Authority and Traffic Aren’t Enough: The Problem with Traditional Metrics

For years, SEO and PR professionals have relied on metrics like DA, DR, and estimated traffic to gauge a website’s value for link building. While these metrics are useful, they are far from foolproof. Here’s why traditional metrics alone can be misleading:

- Manipulated Metrics: It’s relatively easy for bad actors to game DA/DR scores. Many fake “media” sites (often part of PBNs) acquire high authority metrics through artificial means – e.g. buying links from other networks or using expired domains. As a result, a site might boast a DA 50+ while its content is thin and its backlinks come from unrelated or low-quality domains. High traffic numbers can be faked or non-organic as well (for instance, via bots or spammy redirects).

- Lack of Topical Relevance: Raw traffic volume doesn’t indicate who is visiting or why. A site might have 100k monthly visitors, but if that traffic is unrelated to the site’s supposed niche (or generated by clickbait rather than engaged readers), the SEO value of a backlink there is questionable. Traditional metrics don’t capture whether the site’s audience and content are relevant to your industry.

- Quality of Content and User Experience: Domain authority won’t tell you if a site’s articles are well-written and original or scraped and stuffed with keywords. Likewise, traffic stats won’t reveal if the site is riddled with ads and provides a poor user experience. These qualitative factors greatly influence a link’s true value and how search engines perceive it.

- Trust and Legitimacy Signals: Modern SEO goes hand-in-hand with concepts like E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). Does the site have credible authors? Is it transparent about who runs it? Has it earned mentions or backlinks from other reputable sources? Two sites might both be DA 60, but if one is an established industry publication and the other is a fly-by-night blog with no editorial oversight, the backlink impact and risk are very different.

- Staleness and Maintenance: A high authority score might be a legacy from years past. If a site hasn’t been updated in months or years, its influence may be waning. Or worse, an abandoned site could have been repurposed entirely (a common PBN tactic is to buy an expired high-DA domain). Traditional metrics are slow to reflect these changes, if at all.

Industry experts increasingly recognize that metrics should be just one piece of the puzzle. Even authoritative link marketplaces caution against a blind reliance on DA/DR. For example, Authority Builders (a respected marketplace) notes that a good guest post placement is “less about metrics and more about traffic, the history of the site, and signals that show the value of the site (or not)”. In their vetting, they perform visual checks on site design and content to ensure it’s not an obvious PBN, since an outdated or ugly site raises red flags despite strong metrics. They also examine factors like anchor text patterns and social media activity to spot unnatural link schemes.

In short, traditional metrics like authority and traffic are necessary but not sufficient for evaluating link opportunities. Focusing solely on those numbers invites risk:

- You might invest in links that search engines ultimately discount or penalize (if the site is deemed spammy).

- You could damage your brand’s reputation by associating with low-quality or fraudulent sites.

- You miss out on truly great placements on niche but trustworthy sites simply because their metrics aren’t off-the-charts.

This gap between what metrics show and what really matters for quality is exactly what SearchEye’s publisher quality score algorithm aims to fill.

Introducing the SearchEye Publisher Quality Score (QS) Algorithm

To bring much-needed transparency and rigor to link prospecting, SearchEye has developed a Publisher Quality Score (QS) algorithm. This scoring system evaluates a website across multiple site-wide signals that correlate with trustworthy, high-value publishers. The result is a single, easy-to-use score that agencies can rely on to filter out low-quality sites and zero in on the best opportunities.

Scoring Categories: The QS algorithm is built on five core categories, each addressing a critical aspect of site quality:

- Content Quality & Relevance: This factor examines the depth, originality, and relevance of the content on the site. High-quality sites feature well-written, informative content that is topically relevant to the site’s stated niche or industry. For example, a technology news site should have in-depth tech articles, not just thin 300-word posts on random topics. This category flags issues like spun or duplicated content, keyword-stuffed articles, or topics that are all over the map. Consistent, focused, valuable content drives a high score here, whereas sites filled with generic guest posts or low-effort content will score poorly.

- Business Authenticity: Here the algorithm checks for signs that a real, credible organization or individual is behind the site. Indicators of authenticity include clear About Us pages, author bios with verifiable credentials, contact information (physical addresses, phone numbers), privacy or editorial policies, and overall transparency. A legitimate publisher or business will make itself known. In contrast, PBNs and fake sites often hide their ownership or pretend to be “just a blog” with no real persona behind it. For instance, a site that lacks any contact info or is owned by an anonymous shell company would rate low on authenticity. Conversely, a site that is an official company blog or a known media outlet, with LinkedIn profiles of editors linked, etc., scores high. This category essentially measures “is this site who it claims to be, and is that a real business or entity?”.

- Trust & Legitimacy: This dimension assesses the site’s reputation and external signals of trust. It looks at factors akin to Google’s concept of authority and trustworthiness: Does the site have quality inbound links from other trusted sites? Are people talking about or citing this site elsewhere (forums, social media, news)? Is the content factual and free of spammy elements? Additionally, trust signals on the site itself are checked: references to sources, presence of HTTPS/security, absence of malware or sketchy ads, etc. A news site that has earned links from major publications and consistently publishes accurate information would excel here. On the other hand, a site with unnatural backlink patterns (e.g., lots of links from known link directories or PBNs) or one that is essentially unknown in its supposed industry would score low. Many fake “news” sites in link networks have inflated SEO metrics but low real trust – e.g. high DR but mostly irrelevant backlinks. The QS algorithm catches these discrepancies in the trust & legitimacy score.

- User Experience & Design: Quality sites tend to care about their users. This category scores the look-and-feel, usability, and technical performance of the website. It considers site design aesthetics, mobile responsiveness, navigation structure, and the ratio of content to ads. A high-quality publisher typically has a clean, modern design with easy navigation and original imagery or media. If a site is cluttered with pop-ups or dozens of ads, has broken links, or uses a very outdated template with poor readability, it will be marked down. While design can be subjective, the algorithm uses proxies (like page load speed, mobile-friendly tests, layout consistency) to quantify UX. The underlying assumption is that legitimate publishers invest in their site’s presentation, whereas low-quality sites might be “glorified link farms” that neglect user experience. (Indeed, human vetters often use an “ugly site check” as Authority Builders noted – if it looks neglected, dig deeper to ensure it’s not a PBN.)

- Freshness & Maintenance: The web is dynamic, and a healthy site is one that’s actively maintained. This category evaluates how recently and frequently the site is updated, and whether the content appears current. It checks for recent posts, regular publishing patterns, and up-to-date information. It also flags signs of neglect like very old last posts, missing SSL certificates, or plugin errors. A site that publishes new articles weekly and keeps its software and copyright notice up to date will score well. If a site hasn’t added new content in a year, or shows “Lorem ipsum” filler or an outdated 2018 copyright notice, it is likely either abandoned or only sporadically used for link drops, which lowers its score. Freshness matters not just for user value but also because search engines tend to favor actively maintained sites, and links from such sites are less likely to be treated as stale or devalued.

Each of these five categories is scored on a granular scale (for example, 0 to 20 points each), and combined into an aggregate Publisher Quality Score. The maximum QS is 100. Importantly, this score is not static – it can adjust as sites improve or degrade. For example, if a site undergoes a redesign and starts publishing higher quality content, its QS will rise; if it stops updating or floods its pages with sponsored posts, its QS will fall. This dynamic nature ensures that the metric stays relevant over time, unlike one-and-done metrics.
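As a minimal sketch of the aggregation just described, the Python below assumes each category is an integer from 0 to 20 and that QS is their plain, unweighted sum. SearchEye’s actual weighting is not specified here, and the function and constant names are hypothetical.

```python
from __future__ import annotations

# Hypothetical sketch of QS aggregation (not SearchEye's implementation).
# Assumption: five categories, each an integer from 0 to 20, summed to 0-100.
CATEGORIES = (
    "Content Quality & Relevance",
    "Business Authenticity",
    "Trust & Legitimacy",
    "User Experience & Design",
    "Freshness & Maintenance",
)
MAX_PER_CATEGORY = 20  # assumed from the "0 to 20 points each" example


def aggregate_qs(subscores: dict[str, int]) -> int:
    """Validate five category sub-scores and sum them into a 0-100 QS."""
    missing = [c for c in CATEGORIES if c not in subscores]
    if missing:
        raise ValueError(f"missing categories: {missing}")
    for name in CATEGORIES:
        value = subscores[name]
        if not 0 <= value <= MAX_PER_CATEGORY:
            raise ValueError(f"{name} out of range: {value}")
    return sum(subscores[c] for c in CATEGORIES)


# Invented sub-scores for a hypothetical well-run publisher.
example = {
    "Content Quality & Relevance": 16,
    "Business Authenticity": 15,
    "Trust & Legitimacy": 14,
    "User Experience & Design": 17,
    "Freshness & Maintenance": 18,
}
print(aggregate_qs(example))  # 80
```

Rejecting out-of-range or missing categories up front keeps a single bad input from silently producing a misleading total.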

Scoring Scale: Based on analysis of thousands of sites, SearchEye provides some general guidelines to interpret QS values:

- Up to 40 (Red Zone: Suspected PBNs/Low Quality) – Sites that score in this range exhibit multiple red flags. Typically, these are PBNs, link farms, or extremely low-quality blogs. Content is often thin or irrelevant, the site might have an anonymous owner, and design/trust factors are poor. If a site’s QS is, say, 32, you can expect to find issues like plagiarized content, no real business presence, or a templated design used across dozens of sites. Such sites might still have mid-range DA or some traffic, but the holistic quality is lacking. These are sites to generally avoid for link building, as they carry a high risk of being penalized or losing value.

- 40–70 (Yellow Zone: Average to Good Sites) – The bulk of legitimate sites will score somewhere in this middle range. A score of 50 or 60 doesn’t mean the site is bad; it often means the site is acceptable but not exceptional. For instance, a niche hobby blog run by an enthusiast might have original content and decent authenticity (scoring well in those categories) but a clunky design or very low traffic, pulling the total score into the 50s or 60s. Many small business blogs, local news sites, and industry-specific sites fall here. They can be good targets for links if relevant, but the QS ensures you’re aware that they aren’t top-tier publishers. Think of this as the range of “real but not renowned” sites.

- 70+ (Green Zone: High-Quality Publishers) – Scores of 70 and above indicate high-caliber websites. These are often established publications, official organizational sites, or leading blogs in a particular domain. They have strong content, clear legitimacy, good UX, and are kept up-to-date. For example, an authoritative news magazine site or a famous industry blog might score in the 75–85 range. Truly elite sites (major news outlets, governmental or academic sites, etc.) could even approach scores in the 90s. A site in this zone is a prime candidate for PR outreach or guest contributions, as it is very likely to confer real SEO value and brand credibility. (It’s worth noting that very few sites score a perfect 100, since that would require near-flawless performance on all fronts.)

It’s helpful to understand that the QS scale is somewhat conservative – a score in the 80s is excellent and relatively rare. Meanwhile, a site scoring in the 20s or low 30s is almost certainly a spammy network site or one that exists solely for SEO manipulation.
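The zones above can be expressed as a simple lookup. Because the stated ranges share their endpoints (40 and 70), this hypothetical sketch assumes each zone’s lower bound is inclusive, so a score of exactly 40 counts as Yellow and exactly 70 as Green.

```python
# Hypothetical mapping from a QS value to the interpretive zones.
# Boundary assumption: lower bounds are inclusive (40 -> Yellow, 70 -> Green).


def qs_zone(score: float) -> str:
    """Return the interpretive zone for a 0-100 Publisher Quality Score."""
    if not 0 <= score <= 100:
        raise ValueError(f"QS must be between 0 and 100, got {score}")
    if score < 40:
        return "Red: suspected PBN / low quality"
    if score < 70:
        return "Yellow: average to good"
    return "Green: high-quality publisher"


# Scores taken from examples elsewhere in this paper.
for qs in (31, 60, 78):
    print(qs, qs_zone(qs))
```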

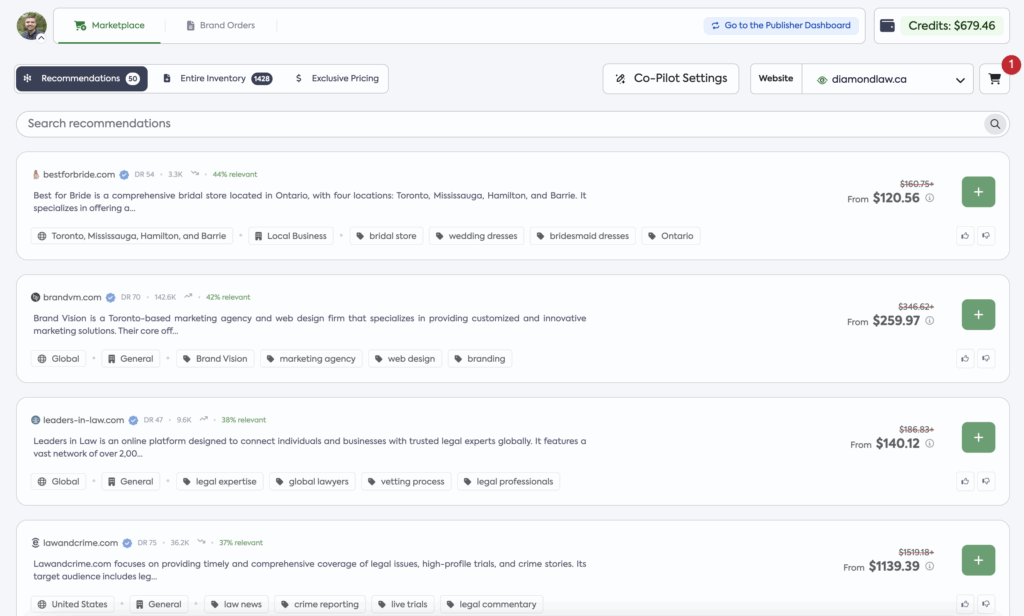

Using QS in the SearchEye Marketplace: SearchEye’s platform integrates the QS algorithm to function as a built-in quality filter and “co-pilot” for PR/link-building campaigns. This works in two ways:

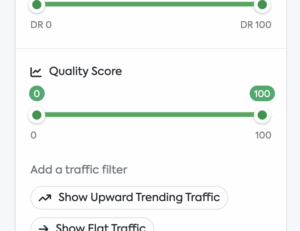

- Quality Score Slider Filter: Users of the marketplace can easily filter available publisher sites by setting a minimum QS. For example, if you only want high-quality placements, you might set the slider to 70+, thereby excluding any opportunities on sites below that threshold. The interface lets you adjust this slider to broaden or tighten the quality criteria, giving you control based on campaign needs. If budget is a concern, you might consider mid-range sites (e.g. 50–60) that are still decent quality; if it’s a high-stakes PR campaign, you push the slider higher. This dynamic filtering is far more powerful than the basic DA or traffic filters on other platforms.

- Auto-Set via Co-Pilot: SearchEye’s platform includes an AI-driven recommendation engine – essentially your digital PR co-pilot. When you input your campaign goals and parameters, the system can automatically suggest an optimal QS threshold. For instance, the co-pilot might detect that you’re in a YMYL (Your Money or Your Life) niche like finance or health, where link quality is paramount, and automatically recommend only sites scoring, say, 65 or higher. It might also adjust based on competitiveness: if your target keywords are very competitive, the co-pilot will emphasize higher-quality links. In practice, this means agencies don’t have to adjust quality settings manually; the platform guides them to the right range. You can still override or adjust the threshold, but the co-pilot ensures you start on the right footing, avoiding the trap of inadvertently picking a low-quality site because it had an attractive price or DA.
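A rough sketch of both features, assuming a simple listing record and rule-based thresholds. The `Listing` fields, the default minimum of 50, the +5 adjustment for competitive keywords, and the example domains are hypothetical; only the 65+ YMYL figure comes from the example above, and a real co-pilot would presumably weigh many more signals.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Listing:
    """Hypothetical marketplace listing: domain, QS, and asking price."""
    domain: str
    qs: int
    price_usd: float


def suggest_min_qs(ymyl: bool, competitive: bool) -> int:
    """Suggest a starting minimum QS for a campaign (illustrative rules only)."""
    threshold = 65 if ymyl else 50  # 65+ for YMYL niches; 50 is an assumed default
    if competitive:
        threshold += 5  # lean toward higher-quality sites for hard keywords
    return min(threshold, 100)


def filter_by_qs(listings: list[Listing], min_qs: int) -> list[Listing]:
    """Apply the slider as a hard floor, then sort best-first by QS."""
    kept = [item for item in listings if item.qs >= min_qs]
    return sorted(kept, key=lambda item: item.qs, reverse=True)


inventory = [
    Listing("site-a.example", 78, 400.0),
    Listing("site-b.example", 45, 150.0),
    Listing("site-c.example", 66, 250.0),
]
min_qs = suggest_min_qs(ymyl=True, competitive=False)
print(min_qs, [item.domain for item in filter_by_qs(inventory, min_qs)])
```

Treating the slider as a hard floor and then sorting by QS keeps the user’s threshold authoritative while still surfacing the strongest sites first.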

Together, these features support a new kind of link-building marketplace – one that prioritizes quality at the forefront, rather than treating it as an afterthought. The QS makes it simple for PR professionals to enforce quality standards at scale, something that used to require painstaking manual review. Next, we’ll compare how this approach stands out against other popular link-building services.

Competitive Analysis

The link-building marketplace space has been around for a while, populated by services like FatJoe, Loganix, Authority Builders, and others. Many SEO agencies also use general outreach vendors or freelancer networks to secure links. How does SearchEye’s quality-first, co-pilot approach compare? Let’s look at key differences:

1. Quality Vetting vs. Open Inventory: Most traditional marketplaces pride themselves on large inventories of available sites. For example, FatJoe’s blogger outreach boasts thousands of sites, and Authority Builders allows anyone to submit sites for consideration. However, the depth of vetting varies. FatJoe’s platform largely relies on basic criteria (they list sites by DA range, traffic range, and category) and does not provide advanced quality filters or transparency before purchase. In fact, FatJoe obscures the actual domain names until after you order, which means you must trust their metrics blindly. This lack of upfront vetting based on qualitative factors means PBNs or low-tier blogs can slip through as long as their DA/traffic look okay. Loganix and similar services also emphasize metrics like DA/DR and niche relevance, but they do not typically offer a thorough qualitative scoring of sites.

SearchEye, in contrast, curates inventory through the QS algorithm – effectively automating what human vetters might check. This means every site in SearchEye’s marketplace carries a quality “badge.” Agencies can immediately see, for example, that Site A has a QS of 78 (very good) while Site B has a QS of 45 (mediocre). Other marketplaces might leave it to the user to inspect a provided list and manually weed out any sites that look like PBNs. With SearchEye, the heavy lifting is done upfront by the scoring system.

2. Transparency of Information: Traditional platforms often give you only a slice of data. In a review of FatJoe’s service, experts noted the platform lacks information on organic traffic trends or geographic traffic breakdown. You might see “Traffic: 5k” but not know if that traffic is consistent or came mostly from one lucky hit on social media. Likewise, you may see “Category: Business” but not realize the site is filled with unrelated posts. Authority Builders does provide more details (they even manually review content quality and reject ~87% of sites), but even their interface doesn’t boil quality down to a single score for the user. It still requires reading through descriptions or trusting that their acceptance criteria did the job.

SearchEye’s QS brings both transparency and simplicity. Instead of digging through various metrics and descriptions to guess if a site is good, you have a clear numeric indicator. Additionally, the five sub-scores (for each quality category) can be made visible in breakdown form, giving insight into why a site scored as it did. For instance, you might see a site with QS 55 – and upon viewing details, realize it scored well on Content and Freshness but poorly on Business Authenticity and Trust. That tells you the site has decent content but maybe is a bit anonymous or not widely trusted, informing how you treat that opportunity. No other marketplace provides this kind of nuanced transparency at a glance.
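To illustrate how a sub-score breakdown explains a composite score, this hypothetical sketch lists the categories that fall below a cutoff. The cutoff of 10 (half of an assumed 20-point category maximum) and the invented breakdown for a QS 55 site are assumptions for illustration only.

```python
from __future__ import annotations


def weak_categories(breakdown: dict[str, int], cutoff: int = 10) -> list[str]:
    """Return category names scoring below `cutoff`, lowest score first."""
    weak = [(score, name) for name, score in breakdown.items() if score < cutoff]
    return [name for score, name in sorted(weak)]


# Invented breakdown matching the QS 55 example: strong content and
# freshness, weak authenticity and trust.
site = {
    "Content Quality & Relevance": 15,
    "Business Authenticity": 7,
    "Trust & Legitimacy": 8,
    "User Experience & Design": 11,
    "Freshness & Maintenance": 14,
}
print(sum(site.values()), weak_categories(site))
```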

3. Focus on Long-Term Value vs. Quick Wins: Competitors like Loganix or Authority Builders have traditionally been used for one-off guest post placements – you pay for a link on a site with the desired DA, and that’s it. The incentive for those marketplaces is volume: the more links they sell, the better. While reputable ones will avoid outright spam sites, they may still include borderline sites as long as they meet the metric criteria. There is often no explicit penalty for a marketplace if a link they sold later turns out to be low-quality, beyond perhaps a refund policy. The result is that many marketplaces have inconsistent quality. Some placements yield great results; others end up being “hot garbage” as some frustrated users have put it.

SearchEye’s approach is built with PR and brand reputation in mind, not just SEO juice. By incorporating quality scoring and making it central, the platform essentially bakes in a preference for fewer, higher-quality links. This aligns with Google’s stance that a few strong links from reputable sites outweigh dozens of questionable ones. It also aligns with PR principles: one mention in a respected publication is far more valuable for brand building than ten mentions on obscure blogs. By acting as a “co-pilot,” SearchEye encourages users to pursue strategies that are sustainable and reputation-safe (it may, for example, warn or discourage you if you try to include too many low-score sites in a campaign).
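A campaign-level guardrail of the kind described could be as simple as counting low-score placements. In this sketch, the cutoff of 40 (the top of the Red Zone) and the 20% maximum share are hypothetical parameters, not documented SearchEye behavior.

```python
from __future__ import annotations

from typing import Optional


def low_quality_warning(
    campaign_qs: list[int], low_cutoff: int = 40, max_share: float = 0.2
) -> Optional[str]:
    """Return a warning if too large a share of placements score below `low_cutoff`."""
    if not campaign_qs:
        return None
    low = sum(1 for qs in campaign_qs if qs < low_cutoff)
    share = low / len(campaign_qs)
    if share > max_share:
        return f"{low} of {len(campaign_qs)} sites score below {low_cutoff} ({share:.0%})"
    return None


print(low_quality_warning([78, 60, 31, 35, 72]))
```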

4. Competitive Pricing and Inventory Quality: A common critique of some marketplaces is that they charge premium prices for links on sites that one could get cheaper elsewhere, and often those sites aren’t top quality to begin with. Part of this is the opaqueness – if you can’t see the domain until after payment, you might be overpaying for a relatively low-value site. SearchEye aims to solve this by highlighting quality and value. If a site has a high QS, users can feel more confident the asking price is justified. If a site has low QS but is expensive, it will stick out and likely be avoided, pressuring publishers to improve their sites or prices. In essence, the quality score brings a form of market efficiency to link buying – good sites are recognized and sought after; poor sites cannot hide behind inflated metrics or sales copy.
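One way to make the price-versus-quality comparison concrete is price per QS point. This sketch flags listings whose price per point is well above the inventory median; the metric, the 1.5x factor, and the example offers are all assumptions rather than a SearchEye feature.

```python
from __future__ import annotations

from statistics import median


def overpriced(listings: dict[str, tuple[float, int]], factor: float = 1.5) -> list[str]:
    """Flag domains whose price per QS point exceeds `factor` times the median."""
    per_point = {d: price / qs for d, (price, qs) in listings.items() if qs > 0}
    if not per_point:
        return []
    typical = median(per_point.values())
    return sorted(d for d, ratio in per_point.items() if ratio > factor * typical)


# Hypothetical offers: domain -> (asking price in USD, QS).
offers = {
    "site-a.example": (400.0, 78),
    "site-b.example": (450.0, 32),
    "site-c.example": (250.0, 66),
}
print(overpriced(offers))
```

Comparing against the median rather than the mean keeps one extreme listing from shifting the baseline for everyone else.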

5. Publisher Vetting Processes: It’s worth noting that some competitors have introduced vetting processes (Authority Builders, for instance, touts a multi-step vetting where they check traffic, content, etc., and claim to reject many sites). This shows that the industry knows quality matters. However, these processes are largely manual and subjective. One marketplace’s team might prioritize different factors than another’s, and human judgment can be inconsistent. SearchEye’s algorithmic approach standardizes the vetting. It also operates continuously – a site’s score can change if the site changes, whereas a manual vet might approve a site once and not review it again for a year, during which the site could deteriorate.

In summary, SearchEye differentiates itself by putting quantitative quality assessment at the core of the platform. Other marketplaces leave quality determination mostly to the user (with basic filters) or behind-the-scenes manual checks. None currently offer a feature like the QS slider or an AI co-pilot to automatically steer campaigns toward quality. Table 1 provides a high-level comparison:

| Feature/Platform | SearchEye (QS) | Typical Marketplaces (FatJoe, Loganix, etc.) |
|---|---|---|
| Quality Scoring | Algorithmic QS (0–100) for each site, with detailed sub-scores in 5 categories. Filterable and used in recommendations. | No composite quality score. Reliance on DA/DR, traffic, and manual inspection by the user. |
| Publisher Vetting | Automated vetting via quality algorithm; continuous updates. Manual oversight for flagged issues. | Basic vetting (ensure site has a certain DA, sometimes a traffic minimum). Some (e.g., Authority Builders) do manual review for obvious PBN signs, but not algorithmic vetting. |
| Transparency | High – users see QS and often the domain name and data upfront. Can choose based on quality threshold. | Varies – some hide domain names until purchase (e.g., FatJoe). Data provided is limited to metrics and brief descriptions. |
| Filters & Targeting | Extensive – filter by QS slider, industry/category, traffic, DA, etc. Co-pilot suggests optimal filters. | Basic – filter by category, DA/DR, traffic range. No quality slider. No AI recommendations (user-driven). |
| Approach to Volume | Emphasizes fewer high-quality links; co-pilot may limit exposure to low-quality inventory. Quality-first even if inventory is smaller. | Emphasizes volume and choice; large inventory including mixed quality. User can potentially choose many links of varying quality. |
| Use Case Focus | Designed as a PR/digital PR co-pilot – aligns link building with brand visibility and long-term SEO value. Great for agencies focusing on content-driven PR. | Designed as SEO vendor services – often used for quick link boosts or bulk link needs. Quality depends on user diligence. |

Table 1: Comparison of SearchEye’s quality-first marketplace vs traditional link building marketplaces.

Ultimately, SearchEye’s unique value is ensuring that “where” you get a link from is as carefully considered as “how many” links you get. The next section illustrates this by examining real websites through the lens of the Publisher Quality Score – highlighting how the algorithm distinguishes between low-quality and high-quality publication sites.

Case Studies / Sample Site Evaluations

To see the Publisher Quality Score algorithm in action, let’s walk through a series of real websites. We will look at their outward characteristics (with visual snapshots) and discuss how each scores across the quality categories. These case studies range from suspected PBNs to high-authority publishers, demonstrating the full spectrum of QS ratings.

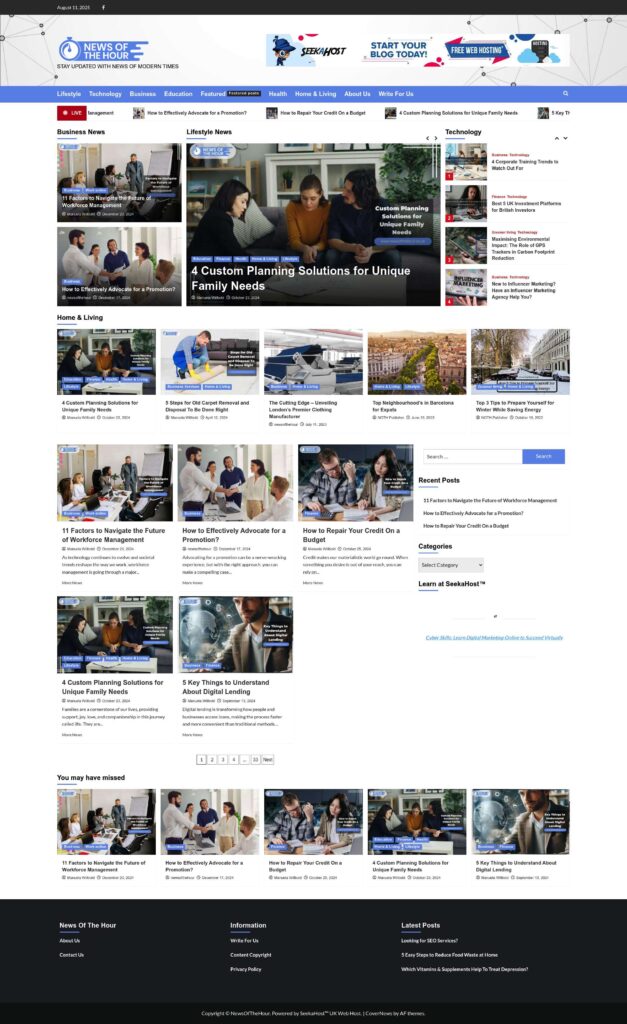

newsofthehour.co.uk – Lifestyle Blog or Link Farm?

Figure: The site newsofthehour.co.uk. Superficially, it looks like a lifestyle news blog, but note the generic content and banner ads – potential indicators of a network site.

Newsofthehour.co.uk presents itself as a “modern lifestyle news” blog, with articles on technology, health, business, etc. At first glance, the site is populated with trending topics and has a passable, if basic, design. However, a closer look reveals hallmarks of a low-quality link site:

- The content, while grammatically okay, is broad and shallow. Topics jump from “How to go Digital in 2021” to “8 Best Virtual Escape Rooms” – suggesting posts are written for SEO value rather than a focused audience. This hurts its Content Quality & Relevance score (many posts appear generic, possibly guest-written solely for backlinks).

- There’s little evidence of a real editorial team or business behind the site. The “About Us” is generic and the site openly invites guest posts and press releases for “any form of PR”. This lack of a Business Authenticity anchor (no identifiable author or owner persona) is a red flag.

- Trust & Legitimacy are low: aside from its own content, the site isn’t referenced by other known outlets, and it even advertises SEO services on its site (indicating the site’s main purpose might be selling SEO value). It resembles what industry studies call a “fake media outlet” – inflated metrics but low real authority.

- The User Experience & Design is mediocre. The site is navigable, but peppered with ads (including a banner for starting your own blog, likely the hosting company behind a network of such sites). The design is a common template used by many small blogs, nothing bespoke or brand-distinctive.

- Freshness & Maintenance: The content is updated periodically (there are posts from 2021, and possibly some more recent ones), but the activity seems sporadic. It doesn’t have the cadence of a true news site. There’s an impression the site is kept just active enough to sell “guest post” slots.

Considering all these factors, SearchEye’s algorithm would score newsofthehour.co.uk in the low range (likely in the low 30s out of 100). Below is an illustrative breakdown of what its QS might look like:

```json
{
  "Content Quality & Relevance": 8,
  "Business Authenticity": 4,
  "Trust & Legitimacy": 6,
  "User Experience & Design": 7,
  "Freshness & Maintenance": 6,
  "Total QS": 31
}
```

Such a score firmly places it in the “PBN/low-quality” category. In practice, an agency using SearchEye would likely filter this site out (e.g., by setting a minimum QS of 40 or 50). Even if its DA or traffic looked tempting, the QS warns that any link here might carry more risk than reward.
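Because the breakdown is plain JSON, its internal consistency can be checked mechanically. This sketch, which assumes the key names used in the breakdown for this site, confirms that the stated "Total QS" equals the sum of the five category scores; the helper name is hypothetical.

```python
import json

breakdown_json = """
{
  "Content Quality & Relevance": 8,
  "Business Authenticity": 4,
  "Trust & Legitimacy": 6,
  "User Experience & Design": 7,
  "Freshness & Maintenance": 6,
  "Total QS": 31
}
"""


def check_total(raw: str) -> int:
    """Parse a QS breakdown and confirm its stated total; return the total."""
    data = json.loads(raw)
    stated = data.pop("Total QS")  # remaining keys are the five categories
    computed = sum(data.values())
    if computed != stated:
        raise ValueError(f"stated total {stated} != sum of categories {computed}")
    return computed


print(check_total(breakdown_json))  # 31
```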

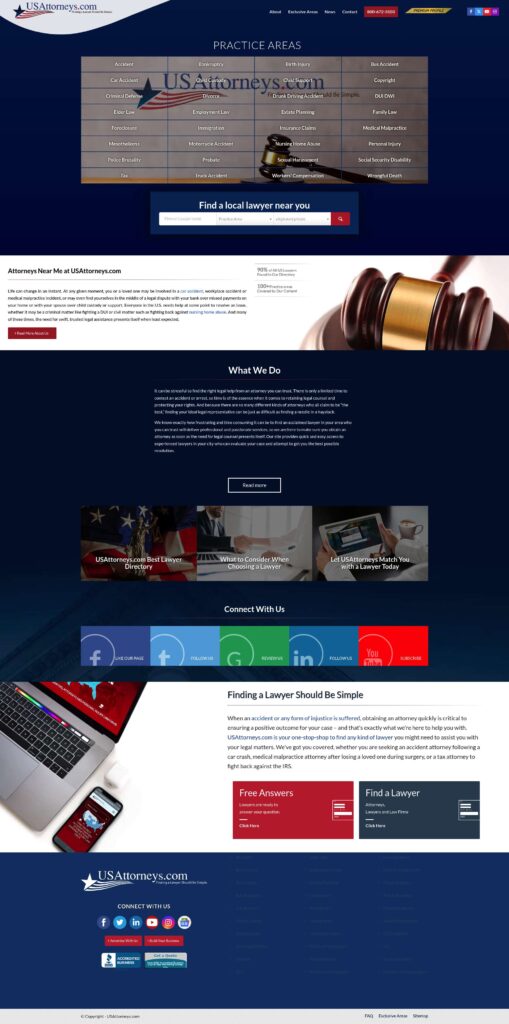

usattorneys.com – High DA Directory with Mixed Quality

Figure: usattorneys.com, a nationwide attorney directory site. It looks professional and has solid domain authority, but how does it fare on content and authenticity?

On the surface, usattorneys.com appears to be a robust legal directory – a site listing attorneys across the U.S., with articles and resources on legal topics. It is an older domain (hence likely has good authority metrics) and the design is relatively clean and professional. However, a deeper evaluation reveals a mid-tier quality profile:

- Content Quality & Relevance: The site does host legal articles and guides, presumably to attract search traffic. The content is relevant to the legal field, though much of it is likely SEO content rather than journalism. Quality is middling; it’s not spammy, but it’s also not particularly insightful or unique (common for directory-affiliated blogs). This category would score moderate.

- Business Authenticity: There is a business model (connecting clients to attorneys), so the site isn’t a faceless blog. It likely has a company behind it and contact info available. However, one might question if usattorneys.com is an impartial resource or if it primarily serves its own network. It does not have the hallmarks of a renowned media organization; it’s more of a lead-gen platform. Authenticity scores might be decent (since it’s a real business with an address) but not as high as a genuine law publication.

- Trust & Legitimacy: The site uses an exact-match keyword domain (“US Attorneys”) and has likely garnered many backlinks over the years. That said, those backlinks may come from SEO directories or other legal sites; we’d need to check whether reputable organizations refer to it. It doesn’t have the external validation of, say, a bar association or a legal magazine. Its trust score would be fair but not excellent.

- User Experience & Design: The design is straightforward and functional, optimized for helping users find an attorney. There aren’t excessive ads or pop-ups, and navigation is logically organized by state and practice area. This likely scores well. The site might feel a bit template-like, but it’s not in poor-UX territory.

- Freshness & Maintenance: Given that the site appears to publish actively and keep its directory updated, it should score well on freshness. It even advertises ongoing link building campaigns on its own pages, which indicates regular activity (although it also suggests the owners sell SEO services on the strength of the site’s authority).

Overall, usattorneys.com would probably land in the “Good but not top-tier” range of QS, perhaps around 50 to 60. It’s not a spammy PBN; it has a legitimate purpose and real content. But it’s also not a high-quality editorial site or news source. For instance, an approximate QS breakdown might be:

{
  "Content Quality & Relevance": 12,
  "Business Authenticity": 10,
  "Trust & Legitimacy": 11,
  "User Experience & Design": 15,
  "Freshness & Maintenance": 12,
  "Total QS": 60
}

This score suggests a site that is generally acceptable for link placement, especially if you’re in the legal niche, but an agency might still prefer higher-QS options for marquee content. Notably, SearchEye’s co-pilot might flag this kind of site as suitable for certain SEO goals (niche relevance, high DA) but perhaps not for a high-profile PR campaign due to only moderate trust/authenticity.
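Each breakdown in these case studies is consistent with Total QS being the plain sum of the five category scores, each apparently on a 0 to 20 scale. That scale is inferred from the examples rather than stated anywhere, so treat it as an assumption. The minimal Python sketch below validates the sub-scores and derives the total. `QSBreakdown` is a hypothetical name, not part of any SearchEye API.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class QSBreakdown:
    """Hypothetical container for the five QS category scores (assumed 0-20 each)."""
    content_quality_relevance: int
    business_authenticity: int
    trust_legitimacy: int
    ux_design: int
    freshness_maintenance: int

    def __post_init__(self) -> None:
        # Assumption: each category is capped at 20, so the five together cap at 100.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0 <= value <= 20:
                raise ValueError(f"{f.name} must be between 0 and 20, got {value}")

    @property
    def total(self) -> int:
        # Total QS modeled as the plain sum of the category scores.
        return sum(getattr(self, f.name) for f in fields(self))


usattorneys = QSBreakdown(12, 10, 11, 15, 12)
print(usattorneys.total)  # 60
```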

ecommercefastlane.com – Authentic Niche Content Site

Figure: The site ecommercefastlane.com. It features podcast content and articles about e-commerce and Shopify, indicating genuine niche focus.

Ecommerce Fastlane is a content site (and podcast) tailored to e-commerce entrepreneurs, particularly those in the Shopify ecosystem. It exudes the vibe of a passion project turned authoritative resource:

- Content Quality & Relevance: Very high. The site contains in-depth podcast episodes with industry guests, long-form articles on e-commerce strategies, and genuinely useful insights. Everything is tightly relevant to its niche (no off-topic filler). This suggests an excellent score in this category; the content is clearly written by someone knowledgeable (or by guest contributors with expertise).

- Business Authenticity: The site is branded around a persona (it’s known to be run by Steve Hutt, a Shopify expert). There is transparency about who is behind the content – a major plus for authenticity. It isn’t a generic “write for us, we accept all topics” blog; it has a focused voice and purpose. This likely scores very well, as the site has an identifiable owner and a credible mission (to help Shopify businesses grow).

- Trust & Legitimacy: Ecommerce Fastlane might not be a household name, but within its niche it’s respected. The podcast has numerous episodes and, as noted on the site, 50k+ downloads, implying a dedicated audience. It has likely earned backlinks from other marketing and e-commerce sites. The presence of real guests and mentions (possibly from within Shopify’s ecosystem) gives it legitimacy. This category should score high: not as high as a mainstream news site, but well above most personal blogs.

- User Experience & Design: The design is modern, clean, and user-friendly, and the homepage immediately communicates the site’s value proposition (podcast, blog posts) without clutter. Based on the screenshot, it is visually appealing and appears mobile-responsive. Such polish is typical of a quality publisher, so the UX score is high. There are no spammy ads, just relevant content and navigation.

- Freshness & Maintenance: There are recent podcast episodes and posts, indicating it’s actively maintained. The content frequency might be weekly or biweekly, which is solid for a content site of this nature. All signs point to a site that is alive and cared for, so freshness is high.

Given these observations, ecommercefastlane.com would earn a QS in the upper range, likely somewhere around the 70s. It exemplifies a “good site” on the cusp of “high-quality publisher.” An estimated breakdown could be:

{
  "Content Quality & Relevance": 16,
  "Business Authenticity": 15,
  "Trust & Legitimacy": 14,
  "User Experience & Design": 16,
  "Freshness & Maintenance": 17,
  "Total QS": 78
}

Such a score is well above the typical cutoff that an agency might set for quality. On SearchEye’s platform, a co-pilot might especially highlight this site for e-commerce related campaigns, since it merges both quality and relevance. A backlink or mention from ecommercefastlane.com would likely provide strong SEO value and also put the brand in front of a targeted, real audience (bonus PR value).

gamerawr.com – Gaming Blog with Hidden Agendas

Figure: A snapshot of gamerawr.com. A gaming news site at first glance, but note the footer – it contains casino and betting links unrelated to gaming.

Gamerawr.com is an interesting case. It presents as a gaming news and review site (with articles on games, consoles, etc.), which should be fine. The design is visually engaging with a modern theme. However, a peek at the site’s footer in the screenshot reveals links to “Non Gamstop casinos”, “Best bonus money” and other gambling-related content. This raises questions about the site’s true purpose:

- Content Quality & Relevance: The visible content about games might actually be decently written and on-topic, which could earn some points. However, the inclusion of gambling promotions suggests the site might be monetized through affiliate schemes that are off-topic. If gaming content is just a front to drive SEO for gambling keywords (a known PBN tactic, using one niche site to boost another niche), relevancy is compromised. The algorithm would catch the presence of those outbound links to unrelated sites and likely ding the content quality/relevance score for mixed signals.

- Business Authenticity: It’s unclear who runs Gamerawr. There’s likely no clear info on whether it’s a hobby blog, a part of a media group, or something else. The presence of gambling affiliate links without clear disclosure is a negative for authenticity. It implies the site owners might be using the site’s authority to sell or trade links under the radar. There is no obvious brand or person taking accountability (e.g., no “Meet the Team”). Authenticity score would be on the low side.

- Trust & Legitimacy: While the site deals with gaming (a generally non-YMYL topic), the fact it links out to gambling sites is a trust red flag. Google and users may see that as a signal of a link network or at least a site willing to post anything for money. Unless Gamerawr has some strong independent citations (which is unlikely if it’s not a known gaming publication), its trust score won’t be great. It’s not likely referenced by reputable gaming communities or known in journalism circles. This is a good example of a site that might have half-decent surface metrics (it might have some traffic from gamers) but under the hood is not fully trustworthy.

- User Experience & Design: On pure design, Gamerawr looks quite good – it has the trappings of a modern blog, slider of articles, categories, etc. Navigating it might be enjoyable for a reader. However, UX also accounts for things like the nature of advertisements or external content. If those gambling links appear site-wide, that’s a UX concern (irrelevant content for a gaming audience). Overall, design gets a relatively good score, but with a small penalty for the slightly deceptive footer links.

- Freshness & Maintenance: If Gamerawr is posting news and game reviews, it likely updates regularly around game release cycles. We’d need to verify, but let’s assume it has a moderate posting schedule (say a few posts per month). It doesn’t look abandoned; it’s in a niche (gaming) that usually has frequent content. So freshness might be fine.
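The off-topic outbound-link signal described in the Content Quality & Relevance bullet above could, in its simplest form, be a keyword check over outbound anchor texts. The sketch below is a hypothetical heuristic, not SearchEye's actual detector. The `PROMO_TERMS` list is an assumption, and only the two gambling anchors quoted from Gamerawr's footer come from the site; the other two anchors are invented for contrast.

```python
# Hypothetical term list; a real system would need far richer signals than keywords.
PROMO_TERMS = ("casino", "betting", "gamstop", "bonus money", "slots")


def off_topic_promo_links(anchor_texts: list[str]) -> list[str]:
    """Return anchor texts containing any hypothetical promo term (case-insensitive)."""
    flagged = []
    for text in anchor_texts:
        lowered = text.lower()
        if any(term in lowered for term in PROMO_TERMS):
            flagged.append(text)
    return flagged


def promo_link_share(anchor_texts: list[str]) -> float:
    """Fraction of outbound anchors flagged as off-topic promotions (0.0 if there are none)."""
    if not anchor_texts:
        return 0.0
    return len(off_topic_promo_links(anchor_texts)) / len(anchor_texts)


footer = ["Latest reviews", "Non Gamstop casinos", "Best bonus money", "PS5 news"]
print(off_topic_promo_links(footer))  # ['Non Gamstop casinos', 'Best bonus money']
print(promo_link_share(footer))  # 0.5
```

Substring matching is deliberately crude here; it only illustrates how a site-wide block of unrelated promotional links can be turned into a measurable ratio.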

Balancing these, Gamerawr might end up with a QS in the 40s or low 50s. It’s a mixed bag: not overtly spammy at first blush, but with some hidden low-quality aspects. A hypothetical score could be:

{
  "Content Quality & Relevance": 12,
  "Business Authenticity": 5,
  "Trust & Legitimacy": 6,
  "User Experience & Design": 14,
  "Freshness & Maintenance": 8,
  "Total QS": 45
}

In SearchEye’s marketplace, this site might appear in the inventory for gaming category opportunities, but its QS would signal caution. An agency might use a slider to exclude anything below 50, thereby skipping Gamerawr. Or, if the agency is less strict, they would at least see the sub-scores and notice the low authenticity/trust, prompting a manual review before deciding to use it.
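The two ways of handling Gamerawr described above (a hard slider floor, or a looser floor plus a look at the sub-scores) can be combined into one rule. This is a minimal Python sketch. The `triage` function and its `review_cutoff` of 8 points (out of an assumed 20 per category) are illustrative choices, not documented SearchEye settings.

```python
def triage(total_qs: int, authenticity: int, trust: int,
           min_qs: int = 50, review_cutoff: int = 8) -> str:
    """Hypothetical triage rule: apply the QS floor first, then check key sub-scores.

    min_qs mirrors the marketplace slider; review_cutoff is an illustrative value.
    """
    if total_qs < min_qs:
        return "exclude"
    # Passing the floor is not enough if authenticity or trust is weak.
    if authenticity < review_cutoff or trust < review_cutoff:
        return "manual review"
    return "accept"


print(triage(45, 5, 6))             # exclude (below the floor of 50)
print(triage(45, 5, 6, min_qs=40))  # manual review (low authenticity and trust)
print(triage(60, 10, 11))           # accept
```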

hackernoon.com – Community-Powered High-Tech Publisher

Figure: The site hackernoon.com, a well-known tech publishing platform. It features a unique design and user-contributed stories, indicating a vibrant community.

HackerNoon is a popular tech blog and publishing platform where technologists and enthusiasts share articles about programming, startups, blockchain, and more. It’s recognized in the industry as a go-to for tech storytelling:

- Content Quality & Relevance: Very high. HackerNoon’s content is contributed by a large community of writers, but it’s curated and often of good quality. They cover highly relevant topics in software and tech innovation. Articles tend to be informative (though quality can vary since it’s open to contributions). Still, the breadth and depth of content is strong in its niche. It would score well here, especially due to the volume of content and many pieces that have gone viral or earned respect in tech circles.

- Business Authenticity: HackerNoon is a known brand (it started on Medium, then became independent). It clearly identifies itself, has an editorial team, and a business structure (they even ran crowdfunding with their readers). They have named individuals (the founder, editors) that are public. This transparency and brand identity mean a high authenticity score. It’s not a faceless site; it’s very much community-driven but with accountable leadership.

- Trust & Legitimacy: The site has strong domain authority and trust by virtue of its popularity and longevity. It’s frequently cited by other websites, and some articles rank well in Google for tech queries. While it’s not a traditional news outlet with fact-checkers, it has a reputation for honest, if informal, tech content. Many industry professionals write for HackerNoon, which adds credibility. Google likely views it as a reputable site in the technology domain. Trust score would be high.

- User Experience & Design: HackerNoon has a distinctive design (green and black theme, quirky illustrations). It might not be to everyone’s taste, but it is certainly user-friendly and modern. The site invests in features for readers and contributors (like reading time indicators, topic tags, etc.). There are some ads and sponsored stories (they monetize via native sponsorships), but it’s generally not spammy. UX is polished and creative. High score in this category.

- Freshness & Maintenance: Extremely high. HackerNoon publishes multiple stories every day. It’s one of those sites that is always buzzing with new content. They also keep their site features up-to-date (since they’re tech-savvy). The site is well-maintained and current, so full points on freshness.

Adding it up, hackernoon.com would easily exceed the 70+ threshold. In fact, it might score into the 80s given its strengths. An approximate breakdown:

{
  "Content Quality & Relevance": 17,
  "Business Authenticity": 18,
  "Trust & Legitimacy": 17,
  "User Experience & Design": 15,
  "Freshness & Maintenance": 20,
  "Total QS": 87
}

This kind of QS indicates a top-tier publisher. An agency using SearchEye would love to see opportunities on HackerNoon (and indeed, SearchEye might facilitate getting content placed on such a site through its network). The co-pilot might auto-select high QS sites like this for tech clients, knowing that a link here provides both SEO authority and genuine exposure to tech readers. It’s the type of site where a mention not only helps your Google rankings but might drive actual traffic and engagement.

nesta.org.uk – High-Authority Institutional Site

Figure: The website for nesta.org.uk, the official site of Nesta (UK’s Innovation Agency). It has a clean design and clearly represents a reputable institution.

Nesta is a prominent non-profit organization in the UK focused on innovation research and policy. Its website reflects its status as an authoritative institution:

- Content Quality & Relevance: The content consists of research reports, news about innovation initiatives, blog articles by experts, and information on their projects. Quality is exceptionally high – these are often research-backed pieces, well-edited and meaningful to a professional audience. Relevance is tightly aligned with innovation, technology, and social impact. This category would score at or near maximum.

- Business Authenticity: Off the charts – Nesta is a real, public-facing organization (formerly the National Endowment for Science, Technology and the Arts). The site is essentially the digital face of a known entity. It lists staff, has contact information, annual reports, etc. There’s no question about who’s behind this site. Authenticity gets a top score.

- Trust & Legitimacy: Also extremely high. Nesta is cited by government, academic, and industry sources. Its .org.uk domain is commonly associated with non-profit organizations. Search engines and users inherently trust this site because of what it represents, and any content on nesta.org.uk is likely considered credible. Its backlink profile likely includes .gov and .edu links, major news outlets referencing its research, and so on. This would yield a near-perfect trust score.

- User Experience & Design: The design is very professional, befitting a corporate/institutional site. It’s user-friendly with clear navigation (About, Our Research, etc.). There are no ads; it’s informational and clean. Possibly not flashy, but very functional and accessible. High UX marks for sure.

- Freshness & Maintenance: Nesta’s site is actively maintained. They regularly publish new research reports and updates on their programs, and the homepage at the time of the screenshot appears to feature recent content. There’s no sign of neglect: the site appears to be kept up to date, and everything is polished. Freshness is high.

Unsurprisingly, nesta.org.uk would score in the uppermost QS tier, quite possibly above 90. A conceivable score breakdown:

{
  "Content Quality & Relevance": 19,
  "Business Authenticity": 20,
  "Trust & Legitimacy": 19,
  "User Experience & Design": 17,
  "Freshness & Maintenance": 18,
  "Total QS": 93
}

For PR professionals, a link or mention on a site like Nesta is like hitting gold: it confers significant legitimacy. SearchEye’s marketplace might not frequently have opportunities for a site of this caliber (since such institutions rarely do standard “guest posts”), but its co-pilot might guide users toward earned media opportunities involving these high-trust sites (for example, suggesting a data-driven study that could get cited by Nesta or similar outlets). The QS algorithm still keeps track of these sites in its index, and if any collaboration is possible, it underscores how valuable it would be.

In summary of the case studies: The QS algorithm clearly differentiates sites on a qualitative spectrum that traditional metrics alone can’t capture. From Newsofthehour (low-30s QS, clear signs of a PBN) to Nesta (90+ QS, a widely trusted institution), we see how each category contributes to the overall picture:

- Low-quality sites falter across multiple dimensions, not just one. They often lack authenticity, have poor trust signals, and mediocre content.

- Mid-quality sites might do well in some areas (e.g., good UX, active content) but lag in others (maybe weaker trust or unclear business model).

- Top-quality sites fire on all cylinders – content, authenticity, trust, UX, freshness – which justifies why links from them are so valuable.

Agencies using SearchEye can leverage these scores to make informed decisions at a glance, saving time and avoiding costly mistakes of engaging with subpar publishers.

How Agencies Can Use This Data to Drive Better PR Outcomes

The introduction of a Publisher Quality Score into the link-building and digital PR workflow is more than just a new metric – it’s a paradigm shift in how agencies plan and execute campaigns. Here are several ways agencies can harness this data to achieve superior PR outcomes:

1. Selecting Better Link Opportunities (Quality over Quantity): As echoed by Google’s own Search Advocate John Mueller, “quality trumps quantity in SEO inbound link building”. With QS at their fingertips, agencies can confidently prioritize a few high-quality placements instead of scattering budget across many questionable sites. This means when reporting to clients, the focus shifts to the caliber of placements (“We secured a feature on a high-quality site with QS 80”) rather than just the count of links. Over time, those quality links tend to produce better SEO results (sustained ranking improvements) and less volatility with algorithm updates. They also mitigate risk: there’s a far lower chance of incurring a penalty or manual action when your backlinks come from legitimate, well-regarded publishers than when your profile is riddled with links from low-QS sites.

2. Enhancing Brand Visibility and Credibility: From a PR perspective, where you are mentioned can be as important as how you are mentioned. A company press release picked up by a spammy “press release syndicator” site (QS 30s) will yield little trust or awareness. In contrast, getting a mention in a respected industry blog or news site (QS 70+) not only boosts SEO but can be showcased in the client’s marketing (“As seen in [High-Quality Site]”). Agencies can use QS to filter for publications that have real readership and authority, thus integrating SEO-driven link building with classic PR aims. The result is a more cohesive strategy where every link also serves as a positive brand mention.

3. Data-Driven Client Education and Justification: Quality scoring provides a clear, data-driven way to communicate with clients about link choices. In the past, conversations might have been: “We chose Site X because it has DA 50.” Now it can be: “We chose Site X because it scored 75 on our quality index, meaning it’s among the top tier of sites in content and trustworthiness. Here’s the breakdown showing its strengths in content and design.” This not only justifies budget allocation (clients understand why a placement might cost more if the quality is verified) but also educates them on why some seemingly “okay” sites were rejected (e.g., “Site Y had decent traffic but only QS 40 – it had signs of low trust, so we passed to protect your brand”). Such transparency builds trust between agencies and clients and positions the agency as utilizing cutting-edge tools for the client’s benefit.

4. Streamlining Outreach with the PR Co-Pilot: The QS isn’t just a score; it’s embedded in an intelligent system (the SearchEye co-pilot). Agencies can input their campaign goals (target audience, niche, SEO objectives) and let the platform suggest or automatically curate opportunities that fit both the topical needs and quality threshold. This is akin to having a junior analyst pre-vet a list of publications for you in seconds. For example, for a fintech client, the co-pilot might filter out all sites below QS 60 and highlight a handful of finance blogs and news sites that are safe bets. This dramatically reduces the time spent sifting through lists of prospects, freeing up the PR team to focus on crafting pitches and content for the high-value targets. It’s the difference between manual prospecting in a haystack versus guided targeting with radar assistance.

5. Informing Content Strategy and Angle of Approach: The detailed breakdown of QS categories can even guide how you approach a high-quality site. If the algorithm indicates a site has superb content quality but slightly lower freshness, one could deduce that perhaps they need more frequent content – maybe your guest contribution or story could fill that gap. If a site has a perfect UX score but moderate business authenticity, maybe it’s a great blog that could use an expert guest post to bolster expertise – an opportunity for your client to be that expert. In this way, the quality audit of a publication can inspire the pitch angle. Agencies can say, “We noticed you haven’t covered Topic X recently (freshness gap) – our client has new data on this and we think it’d resonate with your audience.” This approach increases success rates for placements on coveted sites.

6. Building Long-Term Relationships with Publishers: When agencies consistently prioritize high-QS publishers, they naturally tend to build relationships with the real journalists and editors behind those sites. Over time, this network of quality publishers becomes an invaluable asset – it’s essentially what PR has always been about, but now reinforced by data. Instead of one-off link transactions (common with lower-tier sites), agencies can move towards ongoing collaborations: e.g., a tech blog (QS 80) that the agency contributes to every quarter with expert commentary, or a news site that invites the agency’s client for thought leadership pieces. The result is a stable of backlinks that grow organically and remain resilient, as opposed to a churn of links that might drop or get disavowed later.

7. Measuring Campaign Success Beyond Link Count: With QS, agencies can introduce new KPIs for their campaigns that better reflect value. For instance, Average QS of Acquired Links could be a metric, or Percentage of Links from QS 70+ sites. These quality-oriented KPIs can supplement traditional metrics like number of links or increases in organic traffic. Over time, agencies might find (and may be able to demonstrate) that a smaller number of high-QS links moves the needle more for SEO rankings than a larger number of low-QS links, thereby reinforcing the strategy. Additionally, high-quality links often lead to indirect benefits like referral traffic, social shares, and inclusion in Google’s own features (e.g., being cited in Google’s AI-generated summaries or news carousels due to the site’s authority). These bonuses impress clients, and they are far more attainable through quality sources.
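The two quality KPIs named in point 7 are simple to compute once each acquired link is tagged with its publisher's QS. This is a minimal Python sketch. `campaign_kpis` is a hypothetical helper, and the four-link campaign reuses the case-study scores purely as illustrative input.

```python
def campaign_kpis(link_qs: list[int], high_qs: int = 70) -> dict[str, float]:
    """Return the average QS and the percentage of links from sites at or above high_qs."""
    if not link_qs:
        raise ValueError("need at least one acquired link to compute KPIs")
    average = sum(link_qs) / len(link_qs)
    high_share = sum(1 for qs in link_qs if qs >= high_qs) / len(link_qs)
    return {
        "average_qs": round(average, 1),
        "pct_links_high_qs": round(100 * high_share, 1),
    }


# Hypothetical campaign whose four links landed on sites scoring like the case studies.
print(campaign_kpis([87, 78, 60, 45]))
# {'average_qs': 67.5, 'pct_links_high_qs': 50.0}
```

Tracking both numbers matters: an average can look healthy while only a minority of links come from 70+ publishers, and the percentage exposes that.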

In essence, agencies that leverage SearchEye’s quality data become more strategic and outcome-focused. They are not just link builders; they become digital PR consultants who ensure that every link is also a positive brand association and a step towards thought leadership. This aligns perfectly with the direction of modern SEO – which is less about hacks and volume, and more about earning genuine authority. By adopting the QS framework, agencies future-proof their link-building efforts against algorithm changes (since regardless of how Google’s algorithm shifts, having links from great websites will never be a bad thing) and against market skepticism (as audiences and clients alike grow savvier, they can tell a good website from a bad one).
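Point 5 in the list above describes reading a site's category breakdown to choose a pitch angle. A minimal Python sketch of that idea picks the weakest category and maps it to a hint. It assumes the breakdown arrives as a dict keyed by the category names used in the case studies, and it simplifies "relatively weaker" to "lowest score". The hint strings paraphrase the two examples in point 5; every other category falls back to a generic default. All names here are hypothetical.

```python
# Hints paraphrase the two examples in point 5; other categories get a generic default.
PITCH_HINTS = {
    "Freshness & Maintenance": "offer timely content or new data on a topic they have not covered recently",
    "Business Authenticity": "offer an expert guest post that lends the site named expertise",
}
DEFAULT_HINT = "no category-specific angle; lead with topical relevance"


def weakest_category(breakdown: dict[str, int]) -> tuple[str, int]:
    """Return the lowest-scoring category (ties go to the first one in dict order)."""
    name = min(breakdown, key=lambda category: breakdown[category])
    return name, breakdown[name]


def pitch_hint(breakdown: dict[str, int]) -> str:
    """Map the weakest category to a pitch hint, or the default if none is defined."""
    name, _ = weakest_category(breakdown)
    return PITCH_HINTS.get(name, DEFAULT_HINT)


# Hypothetical site: strong content, but publishing has slowed.
site = {
    "Content Quality & Relevance": 19,
    "Business Authenticity": 15,
    "Trust & Legitimacy": 16,
    "User Experience & Design": 17,
    "Freshness & Maintenance": 10,
}
print(weakest_category(site))  # ('Freshness & Maintenance', 10)
print(pitch_hint(site))
```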

Call to Action: Create a Free Account on SearchEye (2-Week Free Trial)

The evolving landscape of SEO and PR demands tools that put quality first. SearchEye’s publisher quality score algorithm and PR co-pilot are here to help you make that leap with confidence. We’ve seen how this approach can elevate your link-building strategy – now it’s time to experience it firsthand.

👉 Ready to supercharge your PR and SEO campaigns with quality insights? Sign up for a free account on SearchEye today and take advantage of a 2-week free trial. You’ll get full access to the SearchEye marketplace and co-pilot features, allowing you to:

- Discover vetted, high-quality publishing opportunities tailored to your niche.

- Play with the QS slider filter to see the difference it makes when you set higher quality thresholds – watch how the list of available sites transforms.

- View detailed score breakdowns for publisher sites and identify perfect fits for your clients (or weed out the risky ones).

- Experiment with the co-pilot, inputting a campaign and letting AI suggest the optimal strategy and sites.

- Collaborate with confidence – invite team members to your account so your whole agency can align on a quality-first approach.

The two-week trial is fully functional, so you can even start a pilot campaign and see results. No long-term commitment is required – we’re confident that once you see the efficiency gains and the caliber of links you can secure with SearchEye, you’ll wonder how you managed link building before it.

Join the movement towards smarter link building and better PR. Quality backlinks and brand mentions are within reach with the right co-pilot by your side. Create your free SearchEye account now, elevate your agency’s performance, and deliver the results your clients will love – all while future-proofing your strategy in the process.

High-quality link building doesn’t have to be hard. Let SearchEye guide you to the top-tier opportunities that truly move the needle. 🚀

Frequently Asked Questions

DA/DR and traffic are volume metrics; QS is a quality metric for the publisher. QS evaluates content relevance and depth, business authenticity, trust/legitimacy, UX/design, and freshness/maintenance. It helps agencies avoid inflated metrics (e.g., high DA but thin content or anonymous ownership) and prioritize real publishers that build real businesses and audiences.

QS penalizes patterns common to networks: unfocused categories, generic/guest-post churn, anonymous ownership, excessive affiliate/outbound promos, weak trust signals, and neglected UX. Co-Pilot can auto-set conservative QS thresholds for sensitive niches (e.g., finance/health) and surface only vetted, trending publishers—so your team spends time pitching sites with real legitimacy.

Use the marketplace slider to set minimum QS. As a rule of thumb: under 40 = low-quality/PBN risk (generally avoid), 40–70 = acceptable to good (use when highly relevant or budget-sensitive), 70+ = high-quality publishers ideal for marquee placements. Many agencies target an average campaign QS of ≥60, then layer relevance and budget.

Start with QS 70+ for flagship stories and digital PR, then selectively expand to 55–70 when a site is on-topic, audience-fit, and time-sensitive. Use the 12-month trending-traffic view to confirm momentum. Keep a hard floor (e.g., QS 50 or 60) to preserve brand safety and long-term link equity.
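The rule-of-thumb bands and the placement guidance in the last two answers can be expressed as two small functions. This is a minimal Python sketch under stated assumptions. `qs_tier` and `is_eligible` are hypothetical names, a score of exactly 70 is placed in the 70+ band, and the 12-month trending-traffic check is left out because its data source is not described here.

```python
def qs_tier(qs: int) -> str:
    """Map a total QS to the rule-of-thumb bands (a score of exactly 70 counts as 70+)."""
    if not 0 <= qs <= 100:
        raise ValueError(f"QS must be between 0 and 100, got {qs}")
    if qs < 40:
        return "low-quality/PBN risk"
    if qs < 70:
        return "acceptable to good"
    return "high-quality"


def is_eligible(qs: int, on_topic: bool, audience_fit: bool,
                time_sensitive: bool, hard_floor: int = 50) -> bool:
    """Hypothetical reading of the placement guidance: 70+ qualifies by default,
    55-69 only when all three conditions hold, and nothing below the hard floor."""
    if qs < hard_floor:
        return False
    if qs >= 70:
        return True
    return qs >= 55 and on_topic and audience_fit and time_sensitive


for qs in (45, 60, 78):
    print(qs, qs_tier(qs), is_eligible(qs, True, True, True))
# 45 acceptable to good False
# 60 acceptable to good True
# 78 high-quality True
```

Raising `hard_floor` to 60 in this sketch removes the 55 to 59 range from the expansion band, which matches the stricter of the two floors suggested above.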